We’re going to unlock the power of local Large Language Models (LLMs) with Jan.ai, a new piece of open-source software with a friendly, ChatGPT-like interface. You download and install it like any other app on Mac, Windows or Linux, then add the language models of your choice with a few button clicks. It’s open source and works offline, so your chats stay with you.

Why You Might Want To Do This In The First Place

Here are some of the reasons why Jan might be for you.

- Privacy. Local LLMs keep your data on your computer, which pleases data-privacy fans. Your only worry is someone breaking in and stealing your machine! So relax and let your new AI waifu in on all your incredibly, 100% wholesome secrets.

- Keep your costs down. You’ll save £20+ a month by not subscribing to ChatGPT Plus. The catch: running these models takes a lot of compute power, so if you have a potato PC, you might be in for a bad time.

- No downtime. As long as your computer doesn’t blow up, a local LLM won’t be hit by service outages or network latency, because it doesn’t depend on anyone else’s servers or on your internet connection.

- Customization. Experiment with different models and their settings.

Jan state that their ideal customer is “an AI enthusiast or business who has experienced some limitations with current AI solutions and is keen to find open source alternatives.”

That’s You? Great. Download The Thing

First, check the system requirements on the app’s GitHub repo.

Next, head to jan.ai and download it.

I’ll assume you know how to install software on your system. On Windows, the download is an .exe file: double-click it and let it install.

Get A Model

You can find models in the app’s hub. The OpenAI GPT models listed there can’t be downloaded: they run on OpenAI’s servers and need an API key, which defeats the purpose of having a local LLM. The Mistral Instruct 7B Q4 model is a good starting point for text summarization, classification, text completion, and code completion.

All you need to do on this screen is click the download button for the model you want and, if your internet is a bit slow, go put the kettle on.

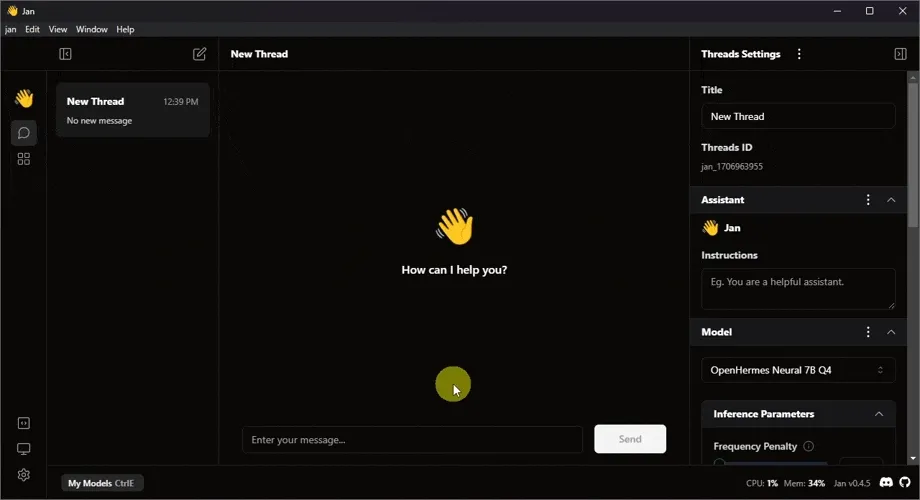

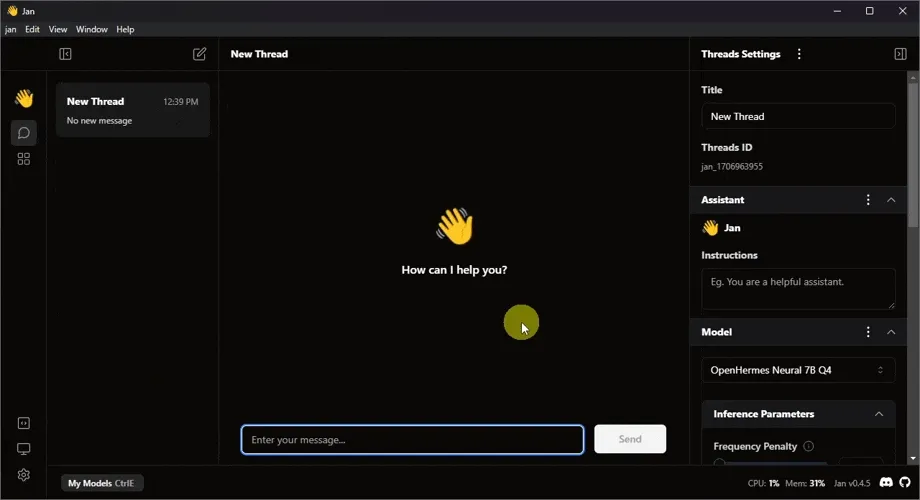

Click the ‘Thread’ speech-bubble icon to go back to the main page.

You can then click on the model name to switch between models or visit the hub again.
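A note on the ‘Q4’ in Mistral Instruct 7B Q4: in the usual naming convention for downloadable models, it indicates the weights have been quantized to roughly 4 bits each, which is a big part of why a 7-billion-parameter model fits on an ordinary PC. The sketch below is my own back-of-envelope arithmetic, not anything from Jan. It assumes every weight uses the same number of bits and ignores file overhead, so treat the numbers as rough lower bounds. The function name is made up for this example.

```python
# Back-of-envelope estimate of how much memory a model's weights need.
# Assumption: every weight is stored with the same number of bits. Real model
# files add overhead (metadata, some higher-precision layers), so treat the
# result as a rough lower bound, not an exact download size.


def estimate_weights_gb(num_params: float, bits_per_weight: float) -> float:
    """Return the approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = num_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_total / 1e9


if __name__ == "__main__":
    # A "7B" model has roughly 7 billion parameters.
    for bits in (16, 8, 4):
        print(f"7B model at {bits}-bit: ~{estimate_weights_gb(7e9, bits):.1f} GB")
```

Running it prints about 14 GB at 16-bit, 7 GB at 8-bit and 3.5 GB at 4-bit, which is why the 4-bit version is the friendlier choice if your PC is on the potato end of the scale.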

Experiment

Start chatting! The first response may take a few seconds while the model loads, but later responses should come faster. Hover over the exclamation-point icons in the models section to see what each setting does.

Fill in the ‘Instructions’ box if you want to provide context for the chatbot. It’s a great way to introduce yourself or give the AI some background information.

Tips and Tricks

- Jan can run models on either your CPU or your GPU, and the choice mainly affects speed. GPUs are generally much faster, but need the NVIDIA CUDA Toolkit installed for full acceleration.

- Join the Jan Discord server to chat with fellow enthusiasts and get extra help.

- Check out their docs for more information, troubleshooting tips, and updates.

- Remember to experiment with different models, prompts, and settings until you find the perfect fit for your needs.
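If you’re not sure whether the CUDA Toolkit is set up, here’s a quick heuristic check in Python. It assumes the toolkit’s compiler, nvcc, ends up on your PATH once installed, and that nvidia-smi comes with the NVIDIA driver. A ‘not found’ doesn’t always mean the software is missing (the toolkit isn’t always added to PATH, especially on Linux), and ‘found’ doesn’t guarantee Jan will actually use your GPU. The function name is made up for this example.

```python
# Heuristic check for NVIDIA GPU prerequisites.
# Assumptions: nvidia-smi ships with the NVIDIA driver, and nvcc (the CUDA
# Toolkit's compiler) is on PATH when the toolkit is installed. These only
# hint at what's installed; they don't prove Jan will use the GPU.
import shutil


def gpu_prerequisites() -> dict[str, bool]:
    """Report whether the NVIDIA driver tool and CUDA compiler are on PATH."""
    return {
        "nvidia-smi (NVIDIA driver)": shutil.which("nvidia-smi") is not None,
        "nvcc (CUDA Toolkit)": shutil.which("nvcc") is not None,
    }


if __name__ == "__main__":
    for tool, found in gpu_prerequisites().items():
        print(f"{tool}: {'found' if found else 'not found'}")
```

If nvcc shows up as ‘not found’ but you’re sure you installed the toolkit, check your PATH before reinstalling anything.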

Where Jan Keeps Your Data

By default on Windows, all your models, chat history and app data are stored in:

C:/Users/[your username]/jan

At the time of writing, you can change this location in Advanced Settings: turn on Experimental Mode, then click the pen icon next to Jan Data Folder.

[UPDATE FROM THE FUTURE: This is no longer an ‘experimental’ setting, but I was quite pleased with the GIF, so it stays.]
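If you’d rather poke around that folder from a script, here’s a small Python sketch. It assumes the default Windows location above, which is simply a folder called jan inside your home directory. I’m only going by the Windows default here, so if your data lives elsewhere (or you moved it with the setting above), pass your own path to list_jan_data. Both function names are made up for this example.

```python
# List what's in Jan's data folder.
# Assumption: the default location is <home>/jan, matching the Windows
# default C:/Users/[your username]/jan. Pass a different Path if you've
# moved the folder.
from pathlib import Path


def default_jan_data_dir() -> Path:
    """Return the assumed default Jan data folder: <home>/jan."""
    return Path.home() / "jan"


def list_jan_data(folder: Path) -> list[str]:
    """Return the sorted names of top-level entries, or [] if the folder is missing."""
    if not folder.is_dir():
        return []
    return sorted(entry.name for entry in folder.iterdir())


if __name__ == "__main__":
    folder = default_jan_data_dir()
    print(f"Looking in: {folder}")
    for name in list_jan_data(folder):
        print(" -", name)
```

Listing only the top level keeps the output short; your downloaded models and chat threads should be somewhere underneath.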

Get Ready To Explore

This is just a basic introduction to Jan. It’s a new piece of software, so expect some missing features (a basic search function would be nice). The team is working on it, though, and because it’s open source, you can contribute too if you’re so inclined.